[Lecture Review Notes] OpenAI's 5 Steps Towards Artificial General Intelligence (AGI)

Thank you for joining our Happy-Hour-and-Learn session! You have now learned the five stages of AI development, but the learning doesn't end there: here are lecture notes capturing the key points from the past hour.

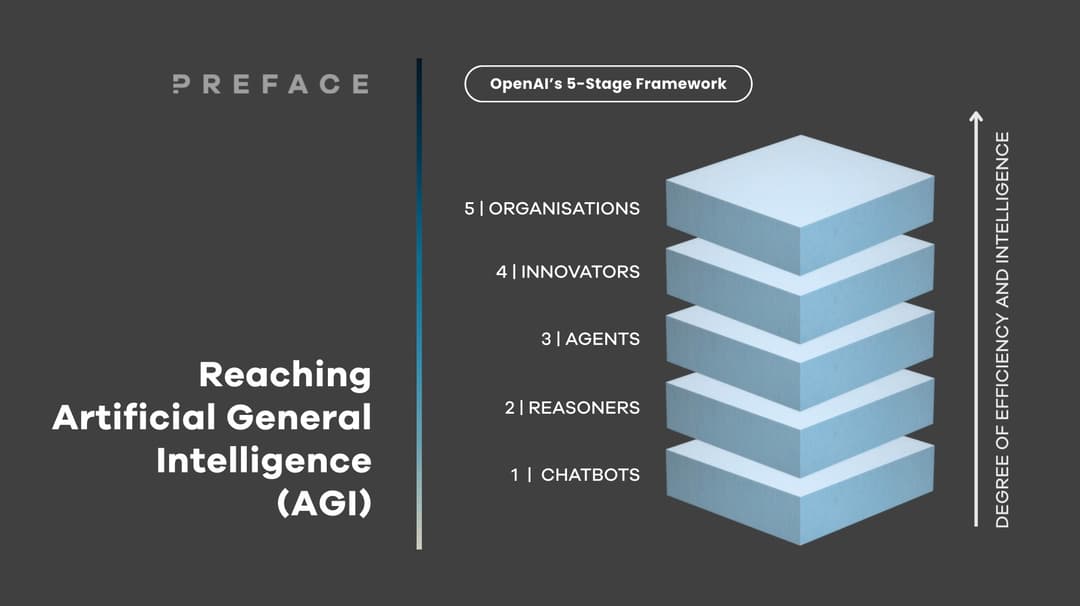

We first provided an overview of how OpenAI, the leading AI organisation that created ChatGPT, envisions a progression towards AGI through five distinct stages:

Conversational AI, focused on language understanding and generation;

Reasoning AI, enabling complex problem-solving and decision-making;

Autonomous AI, acting independently within environments;

Innovator AI, driving new discoveries and technologies; and

Organisational AI, capable of coordinating and optimising complex systems.

These stages represent an increasing level of capability and autonomy in artificial intelligence systems.

ChatGPT as Conversational AI

ChatGPT exemplifies the first stage, conversational AI. It excels at understanding and generating human-like text, which makes it adept at writing content and engaging in dialogue. GPT stands for Generative Pre-trained Transformer, and it's helpful to read the acronym in reverse as TPG, where Transformer is the "input", Pre-trained is the "process", and Generative is the "output".

Prompt techniques like Role-Goal-Context (RGC) and Ask-before-Answer can significantly improve ChatGPT's output. For example, by defining the 'Role', you tailor the AI's persona to fit the task, which helps it draw on relevant knowledge and pick up the nuances of the query. Similarly, 'Ask-before-Answer' instructs ChatGPT to ask clarifying questions before it responds, which improves the quality of the output.
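As a rough illustration, the two techniques can be combined in a small prompt template. This is only a sketch: the function name `build_rgc_prompt`, the section labels, and the example wording are our own assumptions rather than an official format, and the resulting text is simply pasted into (or sent to) whichever chat model you use.

```python
def build_rgc_prompt(role: str, goal: str, context: str,
                     ask_before_answer: bool = False) -> str:
    """Assemble a Role-Goal-Context prompt as plain text (hypothetical helper)."""
    sections = [
        f"Role: {role}",
        f"Goal: {goal}",
        f"Context: {context}",
    ]
    if ask_before_answer:
        # Ask-before-Answer: tell the model to ask clarifying questions first.
        sections.append(
            "Before answering, ask me any clarifying questions you need, "
            "then wait for my replies."
        )
    return "\n".join(sections)


# Example values are made up for illustration.
prompt = build_rgc_prompt(
    role="You are an experienced HR business partner.",
    goal="Draft a one-page onboarding checklist for new analysts.",
    context="We are a 50-person fintech startup with a hybrid work policy.",
    ask_before_answer=True,
)
print(prompt)
```

Keeping the three RGC parts as separate arguments makes it easy to reuse the same role and context while swapping in a new goal.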

However, ChatGPT's primary function is to process and respond to input within the context of a conversation, without the capacity for deeper reasoning. Notably, its reliance on pre-trained capabilities also limits its autonomy and intelligence, making reasoning the necessary next step for advancement.

The Advancement to Reasoning Models

Reasoning models, such as OpenAI's o1, o3, and o4-mini and DeepSeek R1, mark a significant step beyond conversational AI. These models move towards the second stage of OpenAI's framework: reasoning AI gains more autonomy in making logical deductions and is designed to analyse complex information and complete intricate tasks (e.g., in-depth research).

We shared a few demos of how generic AI models behave differently from reasoning AI models, mainly focusing on how generic models are largely limited to menial tasks like rewriting emails. Unlike ChatGPT or generic POE.com, which rely on learned patterns in language, the reasoning models in our demos, DeepSeek R1 and Grok 3 (Think), worked through advanced problem solving and logical deduction before generating their answers.

The Emergence of AI Agents

From reasoning models comes OpenAI's third stage: Autonomous AI, meaning systems that act independently. Unlike the direct prompt-and-response exchange of many generative AI applications, AI agents often work in an iterative loop. They autonomously learn about their context and environment, reason through potential actions, and make decisions to progress towards their goals, often operating independently for a period before reporting back or requiring further user input.

To illustrate this distinction, we demonstrated how AI agents differ from general AI models by showcasing the development of AI-powered agentic workflows using tools like n8n in conjunction with Google Gemini and Perplexity. This demo highlighted how an agent can be set up to monitor information, make decisions based on evolving data, and initiate a sequence of actions (such as research, analysis, and notification), all autonomously.
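The observe, decide, and act cycle described in this section can be sketched in a few lines of plain Python. This is a toy under stated assumptions, not the n8n workflow from the demo: the `PriceWatchAgent` class, the price feed, and the threshold are all invented for illustration, and a real agent would call a language model and external tools inside `decide` and `act`.

```python
from dataclasses import dataclass, field


@dataclass
class PriceWatchAgent:
    """Toy agent: watch a price feed and act when it drops to a target."""
    target_price: float
    log: list = field(default_factory=list)

    def observe(self, feed, step):
        # Learn about the environment: read the latest data point.
        return feed[step]

    def decide(self, price):
        # Reason about what to do next given the goal.
        return "notify" if price <= self.target_price else "wait"

    def act(self, action, price):
        # Carry out the action and record it; True means the goal is reached.
        self.log.append((action, price))
        return action == "notify"

    def run(self, feed):
        # Loop without user input until the goal is met or the data runs out.
        for step in range(len(feed)):
            price = self.observe(feed, step)
            action = self.decide(price)
            if self.act(action, price):
                return f"Goal reached at step {step}: price {price}"
        return "Feed ended without reaching the goal"


agent = PriceWatchAgent(target_price=95.0)
report = agent.run([102.0, 99.5, 97.0, 94.0, 96.0])
print(report)  # Goal reached at step 3: price 94.0
```

The user only sets the goal and reads the final report; everything in between happens inside the loop, which is the key difference from a single prompt-and-response exchange.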

Beyond Agents – Innovator and Organisational AI

OpenAI's fourth stage, Innovator AI, envisions systems that drive new discoveries rather than only reasoning about existing knowledge. This likely relies on advanced learning methods such as reinforcement learning (RL). Unlike supervised learning, which learns from existing data, RL lets an AI teach itself through trial and error guided by incentives, as when AlphaZero mastered Go and came up with novel strategies. Innovator AI could generate many ideas and processes on its own, starting only from goals. Challenges include agents adopting “whatever it takes” strategies, misalignment with human values, and hallucination risks, all of which make reliable Innovator AI a future prospect.
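To make "trial-and-error with incentives" concrete, here is a minimal sketch of the simplest RL setting, a multi-armed bandit with an epsilon-greedy learner. It is not how AlphaZero works (that system combines self-play, tree search, and neural networks); the function name `run_bandit`, the win rates, and the parameter values are assumptions chosen for illustration.

```python
import random


def run_bandit(true_win_rates, steps=2000, epsilon=0.1, seed=0):
    """Learn which option pays best purely from rewards (epsilon-greedy)."""
    rng = random.Random(seed)
    n_arms = len(true_win_rates)
    pulls = [0] * n_arms          # how often each option was tried
    estimates = [0.0] * n_arms    # learned average reward per option
    for _ in range(steps):
        if rng.random() < epsilon:
            # Trial: occasionally try a random option to keep exploring.
            arm = rng.randrange(n_arms)
        else:
            # Otherwise exploit the option that looks best so far.
            arm = max(range(n_arms), key=lambda a: estimates[a])
        # Incentive: the environment returns a reward of 1 or 0.
        reward = 1.0 if rng.random() < true_win_rates[arm] else 0.0
        pulls[arm] += 1
        # Error correction: nudge the running average towards the new reward.
        estimates[arm] += (reward - estimates[arm]) / pulls[arm]
    return estimates, pulls


# The learner is never told these win rates; it only sees rewards.
estimates, pulls = run_bandit([0.2, 0.5, 0.8])
best_arm = max(range(len(estimates)), key=lambda a: estimates[a])
print("Estimated win rates:", [round(e, 2) for e in estimates])
print("Option the learner prefers:", best_arm)
```

No labelled examples are supplied anywhere, which is the contrast with supervised learning: the learner discovers the best option only by trying options and observing the rewards.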

The fifth stage, Organisational AI, sees AI coordinating complex systems in the way an organisation does. The core idea is that multiple AI agents communicate with one another seamlessly. Key standards include Anthropic's Model Context Protocol (MCP), which connects software and tools to AI, and Google's Agent2Agent (A2A) protocol, which supports direct agent-to-agent communication for interoperability. These standards help agents share context and work cohesively, accelerating the use of AI for managing complex operations.
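The idea of agents sharing context through a common message format can be sketched as follows. This is a deliberately simplified, hypothetical example in plain Python: it does not implement or imitate MCP or A2A, and the `Message`, `Agent`, and `coordinate` names are our own.

```python
from dataclasses import dataclass


@dataclass
class Message:
    """A shared message format that every agent understands (illustrative)."""
    sender: str
    recipient: str
    task: str
    context: dict


class Agent:
    def __init__(self, name, skill):
        self.name = name
        self.skill = skill  # a function that turns shared context into a result

    def handle(self, msg):
        # Do the work, then reply with the context enriched by this agent's result.
        result = self.skill(msg.context)
        new_context = {**msg.context, self.name: result}
        return Message(self.name, msg.sender, msg.task, new_context)


def coordinate(task, agents, context):
    """Pass the task through each agent in turn, carrying the context along."""
    for agent in agents:
        request = Message("coordinator", agent.name, task, context)
        reply = agent.handle(request)
        context = reply.context
    return context


# Stand-in skills; real agents would call models or tools here.
researcher = Agent("researcher", lambda ctx: f"3 sources on {ctx['topic']}")
writer = Agent("writer", lambda ctx: f"summary based on {ctx['researcher']}")

final = coordinate("brief", [researcher, writer], {"topic": "AI agents"})
print(final["writer"])  # summary based on 3 sources on AI agents
```

Because both agents read and write the same context structure, the writer can build on the researcher's output without either agent knowing how the other works internally.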

Thank you for rolling up your sleeves to learn AI with us. Join us in the Just Start Campaign!

Learn more: juststart.preface.ai